White Paper

Enhancing MIL-HDBK-217 Reliability Predictions with Physics of Failure Methods

By James G. McLeish, CRE

The Defense Standardization Program Office (DSPO) of the U.S. Department of Defense (DoD) has initiated a multiphase effort to update MIL-HDBK-217 (217), the military’s often imitated and frequently criticized reliability prediction bible for electronics equipment. This document, which is based on empirical models fitted to field data, has not been updated since 1995. The lack of updates led to expectations that its statistically based empirical approach would be phased out, especially after science-based Physics of Failure (PoF) (i.e., reliability physics) research led Gilbert F. Decker, Assistant Secretary of the Army for Research, Development and Acquisition, to declare that MIL-HDBK-217 was not to appear in Army RFP acquisition requirements because it had been “shown to be unreliable and its use can lead to erroneous and misleading reliability predictions”.

Despite such criticism, MIL-HDBK-217 is now being updated as part of the recent climate within the DoD to reembrace Reliability, Availability, Maintainability, and Safety (RAMS) methods.2, 3 This paper reviews the reason for the document’s revival and update along with the primary concerns over its shortcomings.

The team working to update MIL-HDBK-217 developed a proposal for resolving the limitations of empirical reliability prediction. A hybrid approach was developed in which improved and more holistic empirical MTBF models would be used for comparison evaluations during a program’s acquisition and supplier selection activities. Later, during the actual system design and development phase, science-based PoF reliability modeling combined with probabilistic mechanics techniques is proposed to evaluate and optimize the stress and wearout limitations of a design in order to foster the creation of highly reliable, robust electrical/electronic (E/E) systems.

A proposal to incorporate this approach into a future Revision H of MIL-HDBK-217 has been submitted to the DoD-DSPO, where plans for implementing and funding it are now being considered. This paper reviews how PoF methods can coexist with empirical prediction techniques in MIL-HDBK-217, from the point of view of a member of the MIL-HDBK-217 revision team. The author wishes to thank the leaders and team members of the 217 workgroup for their contributions.

OVERVIEW OF MIL-HDBK-217 - RELIABILITY PREDICTION OF ELECTRONIC EQUIPMENT

The two empirical reliability prediction methods defined in MIL-HDBK-217, known as “part count” and “part stress”, are used to estimate the average life of electronic equipment in terms of its mean time between failures (MTBF), which is the inverse of the failure rate λ (lambda). In the part count method, the MTBF value is determined by taking the inverse of the sum of the failure rates (from generic tables) of the components in an electronic device (see equation below).
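As described above, the part count calculation amounts to MTBF = 1 / (λ1 + λ2 + ... + λn), where each λ is a component failure rate. The following is a minimal sketch of that arithmetic only. The part names, quantities and failure rates (in failures per million hours) are hypothetical values chosen for illustration and are not taken from the handbook's tables; the `Part` class and function name are likewise assumptions.

```python
from dataclasses import dataclass


@dataclass
class Part:
    name: str
    quantity: int
    failures_per_million_hours: float  # hypothetical generic failure rate


def parts_count_mtbf_hours(parts):
    """Return MTBF in hours as the inverse of the summed part failure rates."""
    total_rate = sum(p.quantity * p.failures_per_million_hours for p in parts)
    if total_rate <= 0:
        raise ValueError("total failure rate must be positive")
    # Rates are per million hours, so scale the inverse back to hours.
    return 1e6 / total_rate


# Illustrative values only; these are not handbook data.
example_parts = [
    Part("resistor", 40, 0.002),
    Part("ceramic capacitor", 25, 0.004),
    Part("microcircuit", 5, 0.05),
    Part("connector", 2, 0.01),
]

mtbf = parts_count_mtbf_hours(example_parts)
print(f"Predicted MTBF: {mtbf:,.0f} hours")
```

Because the result is driven entirely by the summed rates, every part is treated as a series element with a constant failure rate, which is the simplification the criticisms later in this paper address.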

These basic failure rates can then be scaled to account for the average increase in failure rate caused by operating under harsh environmental conditions such as ground mobile, naval, airborne, missile, space, etc. MIL-HDBK-217 recognizes 14 different generic environment conditions. The part stress method provides additional generic scaling factors intended to account for the reliability degradation effects of usage stresses such as power, voltage, and temperature. The stress factors cannot be used for a prediction until the program has matured to the point that these stresses can be quantified using circuit simulation tools or parametric measurements from functional design prototypes. Stress factors may be applied earlier in the design process through component derating guidelines, which establish stress rules for components in a particular circuit application.

MIL-HDBK-217 CONCERNS

There are numerous concerns about the empirical reliability prediction methods defined in MIL-HDBK-217. The primary criticisms, which have been covered thoroughly in other publications,4, 5 are summarized below:

- The handbook’s reliability predictions are based solely on constant failure rates, which are meant to model only random failure situations. Constant failure rates are used because they simplify failure data collection and calculations, which was a necessity in the precomputerized world of the 1950s and 1960s when these prediction methods were first developed. When failure trends are modeled only as random events via the exponential distribution, early-stage failures and wearout-related failures are not accounted for. Tabulation errors, in which early-stage failures and wearout issues are tallied as random failures, are another risk of this scheme. Significant errors can then occur when reliability predictions are made using the exponential distribution with contaminated (mixed failure mode) base data. Such inaccuracies can misdirect reliability improvement efforts away from more effective quality and durability improvement activities.

- Empirical reliability predictions typically correlate poorly with actual field performance since they do not account for the physics or mechanics of failure. Hence, they cannot provide insight for controlling actual failure mechanisms, and they are incapable of evaluating new technologies that lack a field history on which to base projections.

- The models are based upon industry-wide average failure rates that are not vendor, device, or event specific.

- The MTBF results provide no insight into the starting point, growth rate, and distribution range of true failure trends. Also, the MTBF concept is often misinterpreted by people without formal reliability training.

- Overemphasis on the Arrhenius model and steady-state temperature as the primary factor in electronic component failure, while the roles of key stress factors such as temperature cycling, humidity, vibration and shock have not been individually modeled.6, 7, 8

- Overemphasis on component failures, despite Reliability Information Analysis Center (RIAC) data showing that at least 78% of electronic failures are due to other issues that are not modeled, such as design errors, printed circuit board (PCB) assembly defects, solder and wiring interconnect failures, PCB insulation resistance and via failures, software errors, etc.9

- The last 217 update was in 1995; new components, technology advancements and quality improvements since then are not covered. It is grossly out of date. For example, the microcircuit model was last updated in 1992, and the data used to develop the model was based on parts manufactured in or before 1991; the majority of this data is from the 1980s.10 The connector model dates back to 1985, using data that was 20 years old.5

- The 217 handbook needs to be kept up to date with regularly scheduled releases of new data. This is an enormous task that is further complicated by the creation of each new device and component family that needs to be tracked. This maintenance effort is underfunded; the current 217 data is over 15 years out of date, and the handbook is unable to keep pace with today’s continuous quality/reliability improvement processes that rapidly make components more reliable.
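The first criticism in the list above, that a constant failure rate cannot represent early-stage or wearout behavior, can be illustrated with a short sketch. It uses the two-parameter Weibull hazard function, which is a common choice in reliability work but is an illustrative assumption here and not a model taken from the handbook. The shape values and the 10,000-hour scale are hypothetical.

```python
def weibull_hazard(t, shape, scale):
    """Instantaneous failure rate h(t) of a two-parameter Weibull distribution."""
    if t <= 0 or shape <= 0 or scale <= 0:
        raise ValueError("t, shape and scale must be positive")
    return (shape / scale) * (t / scale) ** (shape - 1)


SCALE_HOURS = 10_000.0  # hypothetical characteristic life
cases = {
    "early-stage (shape 0.5)": 0.5,  # failure rate falls with time
    "random (shape 1.0)": 1.0,       # constant rate, i.e. the exponential case
    "wearout (shape 3.0)": 3.0,      # failure rate rises with time
}

for label, shape in cases.items():
    rates = [weibull_hazard(t, shape, SCALE_HOURS) for t in (100.0, 1_000.0, 10_000.0)]
    print(label, ["%.2e" % r for r in rates])
```

Only the shape 1.0 case yields the same rate at every age. If field data containing the other two behaviors is tallied as a single constant rate, the resulting prediction overstates risk at some ages and understates it at others.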

THE MIL-HDBK-217 UPDATE EFFORT

The MIL-HDBK-217 Revision G update is authorized under DoD Acquisition Streamlining and Standardization Information System (ASSIST) Project # SESS-2008-001, a DoD-DSPO initiative. The Naval Surface Warfare Center (NSWC) Crane Division is managing the project. The 217 Rev G Work Group (217WG) kickoff meeting was held on May 8th, 2008 in Indianapolis, IN.11 It was attended by 23 administrators and reliability professionals from the Navy, the Air Force, defense contractors, RAMS software providers, consultants and test labs. The Army, which no longer uses 217, did not participate. The project’s objectives were to:

- Refresh the data for today’s electronic part technologies.

- Not produce a new reliability prediction approach. Models could be reviewed and modified if needed, but should generally remain intact.

- Continue to look and work the same so reliability engineers would not have to learn a new tool.

- Continue to be a paper document, despite the obvious need for a web-based, living electronic failure rate database, which is essential for staying up to date with the rapid, continuous advancements in electronics.

- Be performed on a shoestring budget, relying on volunteers to support the effort, in contrast to past revision efforts when universities and research organizations were contracted to make the revisions.

The objective of the Rev G project was not to develop a better, more accurate reliability prediction tool or to produce more reliable systems. The actual goal was to return to a common and consistent method for estimating the inherent reliability of an eventually mature design during acquisition so that competing designs could be evaluated by a common process. To support this position, data from an NSWC Crane survey was cited showing that the majority of respondents use 217 for reliability prediction and that they wanted to continue to use it in its current format. The next most frequently used methods were PRISM, 217 PLUS, and Telcordia SR-332.

The 217 Rev F failure rate data and models are a frozen snapshot of conditions from over 15 years ago and are well out of date. Many organizations attempted to improve their reliability estimates by using modified or alternative prediction methods. These ranged from using the 217 models with their own component failure rate data to using alternative empirical models such as SR-332, the European FIDES method, or the RAC PRISM (later renamed RIAC 217 PLUS) method, as well as PoF techniques. These efforts to make better predictions and more reliable systems were encouraged by many reliability professionals. However, the diversity of methods made it difficult for acquisition personnel and program managers to evaluate contractors and their products.

Concerns were raised at the kickoff meeting that a survey of current users of empirical prediction methods failed to capture the views of those who had stopped using them. There was a considerable amount of discussion that the reasons for dissatisfaction, discontinued use and the diverse, individual efforts to find better methods should be considered equally with acquisition needs when defining the goals for updating 217 for the first time in many years. It was felt that the high survey ranking of MIL-HDBK-217 empirical methods was partly due to the lack of an effort to develop and sanction a better method to replace empirical reliability prediction methodologies.

A concept for starting a second effort to develop better reliability prediction methods after the 217 Rev G effort was proposed. However, the discussions at the kickoff meeting led to accelerating this proposal and expanding the project into multiple phases. The original Rev G effort to update the current failure rate models and data would continue as the Phase I effort.

A Phase II task was created to research and define a proposal for an improved reliability prediction methodology and the best means to implement it. Upon acceptance of the Phase II plan, a Phase III effort would later be created to implement the Phase II plan, which would become MIL-HDBK-217-Rev. H.

PHASE II FINDINGS

In researching alternative reliability prediction methods, the 217WG leveraged a Quality Function Deployment (QFD) approach using data collected by the Aerospace Vehicle Systems Institute (AVSI) AFE 70 reliability framework and roadmap project, which compiled and documented the needs of potential users and correlated them into functions and tasks for achieving the objective. QFD is a widely used tool that helps project teams sort out, identify and prioritize the requirements for complex issues in order to create new products or services that incorporate key quality characteristics from the viewpoint of potential customers or end users. The results are documented in a matrix format known as the house of quality.

The QFD analysis identified the need for a more holistic approach to reliability prediction that could more accurately evaluate the risks of specific issues in addition to overall reliability. Also needed were a way to evaluate the time to first failure in addition to MTBF and a way to deal with the constant emergence of new technologies that did not require years of field performance before reliability predictions could be made. After considerable evaluation, the Phase II team converged on two basic approaches: 1) to improve the empirical reliability prediction approach and 2) to embrace and standardize the science-based PoF approach, in which deterministic cause-and-effect relationships are analyzed using fundamental engineering principles.

After further deliberation over the strengths and weaknesses of each approach, it became evident that neither method alone could resolve all reliability prediction issues and satisfy the needs of every user group. The team concluded that a two-part hybrid approach should be considered.

An updated and improved empirical approach based on the RIAC 217 PLUS methodology was proposed to provide preliminary module- or system-level reliability estimates based on historical component failure rates. This approach would support acquisition comparison and program management activities during the early stages of an acquisition program.

The proposed second part would define PoF modeling for use during the actual engineering design and development phases of a program. These methods would be used to assess the susceptibility and durability of design alternatives to various failure mechanisms under the intended usage profile and application environment. In this way, items that lacked the durability and reliability required for an application could be screened out early and at low cost during the design phase, resulting in more reliable military hardware and systems. Since the 217 PLUS approach has been well defined in other publications,12 the rest of this paper provides an overview of the PoF approach proposed for 217 Rev H.

PHYSICS OF FAILURE BASICS

The physics of failure (also known as reliability physics) approach applies analysis early in the design process to predict the reliability and durability of design alternatives in specific applications. This enables designers to make design and manufacturing choices that minimize failure opportunities, which produces reliability-optimized, robust products. PoF focuses on understanding the cause and effect of the physical processes and mechanisms that cause degradation and failure of materials and components. It is based on analyzing the loads and stresses in an application and evaluating the ability of materials to endure them from a strength and mechanics of materials point of view. This approach integrates reliability into the design process via a science-based process for evaluating materials, structures and technologies.

These techniques, known as load-to-strength interference analysis, have been used for centuries. They are a basic part of mechanical, structural, construction and civil engineering processes. Unfortunately, during the early development and evolution of E/E technology in the 1950s and 1960s, this approach was not used, since electrical engineers were not trained in or familiar with structural analysis techniques and the miniaturization of electronics had not yet reached the point where structural and strength optimization was required. Also, as with any new technology, the reasons for failures were not initially well understood. Research into E/E failures was slow and difficult because, unlike failures of mechanical and structural items, most E/E failures are not readily apparent to the naked eye: most components are microscopic and electrons are not visible.

Due to these difficulties, empirical probabilistic reliability methods were adopted instead and became so entrenched that the development of better alternatives was stifled.

Over the last 25 years, great progress has been made in PoF modeling and the characterization of E/E material properties. By adapting the techniques of mechanical and structural engineering, computerized durability simulations of E/E devices using deterministic physics and chemistry models are now possible and are becoming more practical and cost effective every year. Failure analysis research has led PoF methods to be organized around three generic root cause failure categories: errors and excessive variation, wearout mechanisms, and overstress mechanisms.

OVERSTRESS FAILURES

Overstress failures such as yield, buckling and electrical surges occur when the stresses of the application rapidly or greatly exceed the strength or capabilities of a device’s materials. This causes immediate or imminent failures. In items that are well designed for the loads in their application, overstress failures are rare and random. They occur only under conditions that are beyond the design intent of the device, such as acts of God or war, for example being struck by lightning or submerged in a flood. Overstress is the PoF engineering view of random failures from traditional reliability theory. If overstress failures occur frequently, then either the device was not suited for the application or the range of application stresses was underestimated by the designer. PoF load-stress analysis is used to determine the strength limits of a design for stresses like shock and electrical transients and to assess whether they are adequate.
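The load-to-strength comparison behind overstress analysis can be sketched numerically. The example below assumes, purely for illustration, that the applied load and the material strength are independent and normally distributed, so the probability that load exceeds strength follows from the distribution of their difference. The function names and the shock values (in g) are hypothetical and do not come from the proposed handbook sections.

```python
import math


def normal_cdf(z):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def interference_failure_probability(load_mean, load_sd, strength_mean, strength_sd):
    """Probability that load exceeds strength for independent normal variables."""
    margin_sd = math.hypot(load_sd, strength_sd)  # std. dev. of (strength - load)
    if margin_sd == 0:
        raise ValueError("at least one standard deviation must be non-zero")
    z = (strength_mean - load_mean) / margin_sd
    return normal_cdf(-z)


# Hypothetical shock load and shock capability of a mounted component, in g.
p_fail = interference_failure_probability(
    load_mean=50.0, load_sd=5.0, strength_mean=80.0, strength_sd=10.0
)
print(f"Probability that the load exceeds the strength: {p_fail:.4%}")
```

Widening the gap between the two means, or reducing either spread, shrinks the overlap of the distributions and with it the overstress risk, which is the lever a designer adjusts.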

WEAROUT FAILURES

Wearout in PoF is defined as stress driven damage accumulation of materials which covers failure mechanisms like fatigue and corrosion. Numerous methods for stress analysis in structural materials have been developed by mechanical engineers. These techniques are readily adapted to the microstructures of electronics once material properties have been characterized. PoF wearout analysis does more than estimate the mean time to wearout failures for an assembly. It identifies the most likely components or features in a device to fail 1st, 2nd, 3rd, etc, along with their times to first failure and their related fall-out rates afterwards, for various wearout mechanisms. This enables designers to determine which (if any) items are prone to various types of wearout during the intended service life of a new product. The design can then be optimized until susceptibility to wearout risks during the desired service life is designed out.
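The idea of accumulating stress-driven damage and then ordering features by expected time to failure can be sketched as follows. This example uses the Palmgren-Miner linear damage rule, a widely known fatigue approximation that is chosen here as an assumption and is not stated in the text above to be the proposed method. The feature names, yearly cycle counts and cycles-to-failure values are hypothetical.

```python
def damage_per_year(load_cases):
    """Sum the Miner's-rule damage fractions n/N over all load cases.

    load_cases is a list of (cycles_applied_per_year, cycles_to_failure) pairs.
    """
    return sum(applied / to_failure for applied, to_failure in load_cases)


def rank_by_wearout_life(features):
    """Return (feature, predicted life in years) pairs, shortest life first."""
    lives = {name: 1.0 / damage_per_year(cases) for name, cases in features.items()}
    return sorted(lives.items(), key=lambda item: item[1])


# Hypothetical usage profile: daily thermal cycles plus occasional severe cycles.
features = {
    "BGA corner solder joint": [(365, 4_000), (20, 500)],
    "plated through-hole via": [(365, 20_000)],
    "connector contact": [(200, 5_000)],
}

ranking = rank_by_wearout_life(features)
for position, (name, life_years) in enumerate(ranking, start=1):
    print(f"{position}. {name}: about {life_years:.1f} years to wearout")
```

A designer could compare the shortest predicted life against the intended service life and revise the weakest feature first, then repeat the ranking.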

ERRORS AND EXCESSIVE VARIATION RELATED FAILURES

Errors and excessive variation issues comprise the PoF view of the traditional concept of early-stage failure. Opportunities for error and variation touch every aspect of design, supply chain and manufacturing processes. These types of failures are the most diverse and challenging category. Since diverse, random, stochastic events are involved, these types of failures cannot be modeled or predicted using a deterministic PoF cause-and-effect approach. However, reliability improvements are still possible when PoF knowledge and lessons learned are used to evaluate and select manufacturing processes that are proven to be capable, to ensure robustness, and to implement error proofing.

INTEGRATING POF INTO MIL-HDBK-217

The 217WG developed a dual approach for integrating PoF overstress and wearout analysis into 217 Rev. H alongside improved empirical prediction methods. One proposed PoF section addresses electronic component issues while the second deals with circuit card assembly (CCA) issues. These sections are meant to serve as a guide to the types of PoF models and methods that exist for reliability assessments.

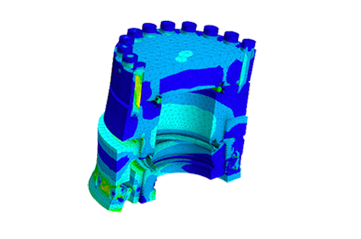

PHYSICS OF FAILURE METHODS FOR COMPONENTS

The proposed PoF component section focuses on the failure mechanisms and reliability aspects of semiconductor dies, microcircuit packaging, interconnects and wearout mechanisms of components such as capacitors. A current key industry concern is the expected reduction in lifetime reliability due to the scaling reduction of integrated circuit (IC) die features that have reached nanoscale levels of 90, 65 and 45 nanometers (nm).13 Models that evaluate IC failure mechanisms such as time dependent dielectric breakdown, electromigration, hot carrier injection and negative bias temperature instability are being considered to address this concern.14

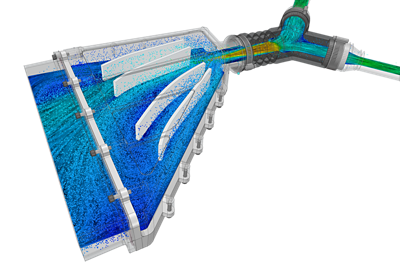

PHYSICS OF FAILURE METHODS FOR CIRCUIT CARD ASSEMBLIES

The proposed PoF circuit card assembly section defines 4 categories of analysis techniques (see Figure 2 at the end of this paper) that can be performed with currently available computer aided engineering (CAE) software. A probabilistic mechanics approach is used to account for variation issues.15 This methodology is aligned with the Analysis, Modeling and Simulations methods recommended in Section 8 of SAE J1211 - Handbook for Robustness Validation of Automotive Electrical/Electronic Modules.16 The 4 categories are:

- E/E Performance and Variation Modeling is used to evaluate whether stable E/E circuit performance objectives are achieved under static and dynamic conditions that include tolerancing and drift concerns.

- Electromagnetic Compatibility (EMC) and Signal Integrity Analysis is used to evaluate whether a CCA generates or is susceptible to disruptions by electromagnetic interference and whether the transfer of high frequency signals is stable.

- Stress Analysis is used to assess the ability of a CCA’s physical packaging to maintain structural and circuit interconnection integrity, maintain a suitable environment for E/E circuits to function reliably and determine if the CCA is susceptible to overstress failures.17

- Wearout Durability and Reliability Modeling uses the results of the stress analysis to predict the long-term stress aging/stress endurance, gradual degradation and wearout capabilities of a CCA.17 Results are provided in terms of time to first failure and the expected failure distribution, in an ordered list (1st, 2nd, 3rd, etc.) of the devices, features, mechanisms and sites of the most likely expected failures.
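The tolerancing concern in the first category above can be illustrated with a small Monte Carlo sketch in the spirit of a probabilistic approach to variation. It is an assumption-laden toy example, not a method taken from the proposed handbook sections: the circuit is a hypothetical 5 V resistive divider, resistor values are drawn uniformly within their tolerance band, and the acceptance limit of ±0.05 V is invented for illustration.

```python
import random


def divider_output(v_in, r_top, r_bottom):
    """Output voltage of an unloaded two-resistor voltage divider."""
    return v_in * r_bottom / (r_top + r_bottom)


def out_of_spec_fraction(trials, tolerance, seed=0):
    """Estimate the fraction of builds whose output misses the limit.

    tolerance is the fractional resistor tolerance, e.g. 0.01 for 1% parts.
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch repeatable
    v_in, r_nominal, limit_volts = 5.0, 10_000.0, 0.05  # hypothetical values
    nominal = divider_output(v_in, r_nominal, r_nominal)
    failures = 0
    for _ in range(trials):
        r_top = r_nominal * (1.0 + rng.uniform(-tolerance, tolerance))
        r_bottom = r_nominal * (1.0 + rng.uniform(-tolerance, tolerance))
        if abs(divider_output(v_in, r_top, r_bottom) - nominal) > limit_volts:
            failures += 1
    return failures / trials


for tol in (0.01, 0.05):
    fraction = out_of_spec_fraction(trials=2_000, tolerance=tol)
    print(f"{tol:.0%} resistors: {fraction:.1%} of simulated builds out of spec")
```

With 1% parts the worst-case deviation stays inside the limit, so no simulated build fails, whereas 5% parts push a noticeable share of builds out of specification. Drift over life could be explored the same way by widening the sampled range.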

IMPLEMENTATION CONCEPTS

Each of the 4 groups contains analysis tasks that use similar analytical skills and tools. Combined, these techniques provide a multidiscipline virtual engineering prototyping process for finding design weaknesses, susceptibilities to failure mechanisms and for predicting reliability early in the design when improvements can be implemented at low costs.

Most of these modeling techniques require specialized modeling skills and experience with CAE software. It is not expected that reliability engineers would personally learn and perform these tasks. However, the definition and recognition of PoF methods as integral, accepted reliability methods for creating robust and highly reliable systems is expected to help connect reliability professionals with design engineers and help integrate reliability by design concepts into design activities.

The PoF sections are not intended to mandate that every model has to be applied to every item in every design or that modeling is limited to only the listed models since new models are constantly being developed. Furthermore, the list is not all inclusive since PoF models for every issue do not yet exist. The goal is to identify existing evaluation methods that can be selected as needed during design and development activities to mitigate reliability risks. This way, more reliability growth can occur faster and at lower costs, in a virtual environment during a project’s design phase.

By establishing a roadmap for merging fundamental engineering analysis and reliability methods, a technology infrastructure can be encouraged to continue to grow (perhaps faster) and to provide more tools and methods for reliability engineers and product design teams to use in unison.
